When we try to use Excel Source/Excel Destination as Data Flow component we have to use Excel Connection Manager. The limitations of using Excel Connection Manager is that we have to run SSIS Solution in 32 bit Runtime otherwise we will get below error.

Error: SSIS Error Code DTS_E_CANNOTACQUIRECONNECTIONFROMCONNECTIONMANAGER.

The AcquireConnection method call to the connection manager “” failed with error code 0xC0209303. There may be error messages posted before this with more information on why the AcquireConnection method call failed.

Error: The requested OLE DB provider Microsoft.Jet.OLEDB.4.0 is not registered. If the 64-bit driver is not installed, run the package in 32-bit mode. Error code: 0x00000000.

An OLE DB record is available. Source: “Microsoft OLE DB Service Components” Hresult: 0x80040154 Description: “Class not registered”.

Image:

My findings with Excel Connection Managers are as follows.

Excel Connection Manager works in 64 bit (Run64BitRuntime = True) in SQL SERVER 2012 Integration Services (SSIS 2012).

That’s not true. Many of us believe that in SQL Server 2012 Integration Services Microsoft has fixed the issue to run SSIS Solution in 64-bit using Excel Connection Manager. The reason it is not working because Microsoft provides Microsoft Visual Studio or SQL SERVER Data Tools (SSDT) as 32 bit application. Above solution I have created in SSIS 2012 and it proves that Excel Connection Manager fails if I run my solution in in 64 bit (Run64BitRuntime = True).

What is the alternative?

We believe that Office OLE DB Provider are 32 bit only which not the true case. We can download 64 bit version of Microsoft Access Database Engine 2010 Redistributable or Microsoft Access Database Engine 2013 Redistributable. As mentioned earlier Microsoft Visual Studio or SQL SERVER Data Tools are 32 bit applications only so we cannot use 64 bit OLEDB Providers so, In the development Environment we have to use 32 bit provider but we can use 64-bit Provider in Production.

After I installed Microsoft Access Database Engine 2010 Redistributable 32 bit in my development machine (SSIS 2012) and replaced Microsoft.Jet.OLEDB.4.0 to Microsoft.ACE.OLEDB.12.0. Run the solution in 32 bit (Run64BitRuntime = False) it works fine. After I change Run64BitRuntime = True, Build and Deployed it to Production where I have installed 64-Bit Microsoft Access Database Engine 2010 Redistributable it works fine.

1) Change settings in Excel Connection Manager as Microsoft.Jet.OLEDB.4.0 to Microsoft.ACE.OLEDB.12.0

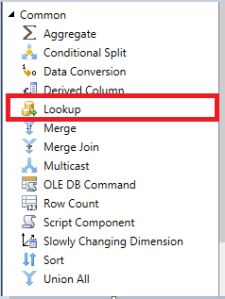

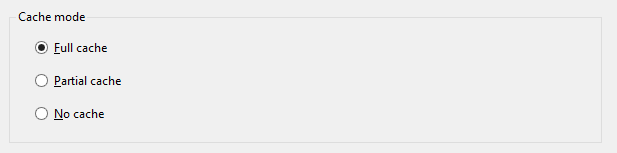

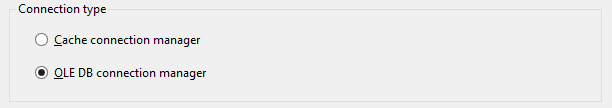

2) Another alternative is we can use OLEDB Source and OLEDB Connection Provider as below.

Change the extended property to Excel 8.0;HDR=YES. It works fine in 32 bit in Development machine and 64 bit in production machine.

If you are connecting to Microsoft Office Excel Data, add the appropriate Extended Properties of the OLEDB Connection String based on the Excel File type:

| File Type (Extension) | Extended Properties. |

| Excel 97-2003 Workbook (.xls) | “Excel 8.0” |

| Excel 2007-2010 Workbook (.xlsx) | “Excel 12.0 Xml” |

| Excel 2007-2010 Macro-enabled workbook (.xlsm) | “Excel 12.0 Macro” |

| Excel 2007-2010 Non-XML binary workbook (.xlsb) | “Excel 12.0” |

There is also a myth that we must have installed Microsoft Office on the box or on the machine or server where we running or the developing the SSIS Solution, that’s not the true case.

Conclusion: It is possible that we can load Microsoft Office Excel Files in 64 bit of SSIS.