Note: This topic applies to Microsoft SQL SERVER 2016.

In my previous Blog Post we have learned about how to implement Temporal Table on a new table? For more information on Temporal table please follow Temporal Table in SQL SERVER 2016.

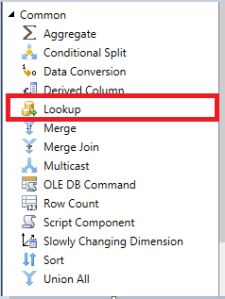

Let’s assume that we have below solution managed via stored Procedure for System Versioning. In this existing solution we have a table called dbo.SalesPerson and if the Sales Person’s region changed then we maintain the history in the table called dbo.SalesPersonHistory.

Step1: First let’s create a Demo Database Called SQL2016Demo.

| USE master Go — Drop database IF DB_ID (‘SQL2016Demo’) IS NOT NULL DROP DATABASE SQL2016Demo; GO CREATE DATABASE SQL2016Demo; |

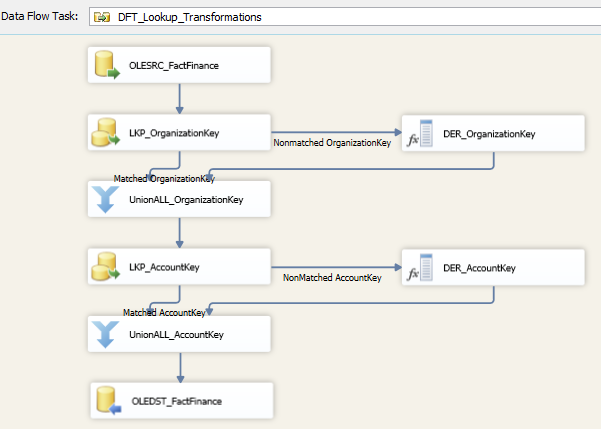

Output: Below Image show that SQL2016Demo Database is created.

Image1:

Step2 : In this step we will create a table called dbo.SalesPerson with an assumptions that each sales person has their sales region and their Sales Region sales over the time, when Business change their sales region we need to keep their Historical Sales Regions in a table called dbo.SalesPersonHistory. Here, in this example we have two columns called RowStartDate and RowEndDate to track the changes for the Sales Region for each SalesPerson over the period of time.

| USE SQL2016Demo; GO CREATE TABLE dbo.SalesPerson ( SalesPersonId Int IDENTITY, SalesPersonName Varchar (50), SalesRegion Varchar (50), RowStartDate date NOT NULL Default GetDate (), RowEndDate date NOT NULL Default ‘2079-12-31’, Constraint PK_SalesPerson_SalesPersonId Primary Key(SalesPersonId) ); GO CREATE TABLE dbo.SalesPersonHistory ( SalesPersonId Int NOT NULL, SalesPersonName Varchar (50), SalesRegion Varchar (50), RowStartDate date NOT NULL, RowEndDate date NOT NULL ); GO –Populate the dbo.SalesPerson Table with some initial data. INSERT dbo.SalesPerson(SalesPersonName , SalesRegion , RowStartDate) Values (‘John Smith’ , ‘Hertfordshire’ , ‘2015-05-01’) , (‘Anthony Webster’ , ‘South West London’, ‘2015-05-01’), (‘Matt Williams’ , ‘Kingston upon Thames’, ‘2015-05-01’), (‘James Jenkinson’ , ‘Croydon’, ‘2015-05-01’), (‘Tom Bailey’ , ‘Slough’, ‘2015-05-01’) GO |

Step3: In this step we are creating a stored procedure to update the Sales Region for the Sales person and keep their historical Sales Region in SalesPersonHistory Table. Please find below T-SQL to create a stored procedure and update the Sales Region for the Sales Person Id 1 and 2.

| USE SQL2016Demo; GO Create Procedure dbo.usp_UpdateSalesPersonRegion(@SalesPersonid INT, @SalesRegion Varchar(50)) As Begin SET NOCOUNT ON; UPDATE dbo.SalesPerson SET SalesRegion = @SalesRegion , RowStartDate = GetDate() OUTPUT inserted.SalesPersonId, inserted.SalesPersonName, deleted.SalesRegion, deleted.RowStartDate, GetDate()-1 as RowEndDate INTO dbo.SalesPersonHistory( SalesPersonId, SalesPersonName, SalesRegion, RowStartDate, RowEndDate) Where SalesPerson.SalesPersonId = @SalesPersonid;SET NOCOUNT OFF; END GO EXEC dbo.usp_UpdateSalesPersonRegion @SalesPersonid = 1, @SalesRegion = ‘North West London’ GO EXEC dbo.usp_UpdateSalesPersonRegion @SalesPersonid = 2, @SalesRegion = ‘Hertfordshire’ |

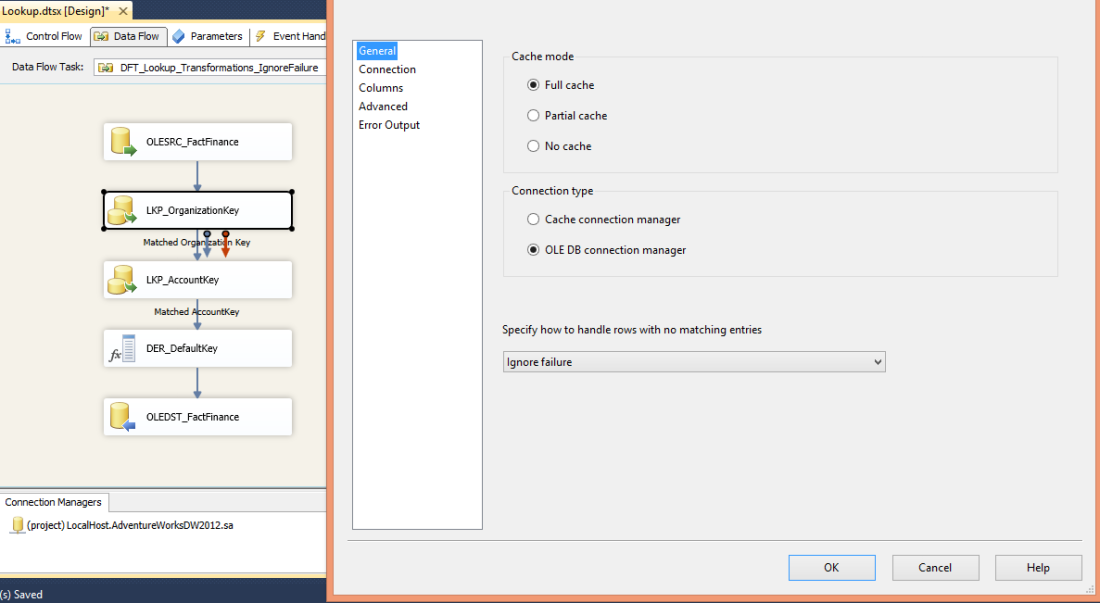

Please find below Image2: which show how the records look like in dbo.SalesPerson and dbo.SalesPersonHistory table.

Image2:

So far, I have implemented a solution via stored procedure which maintains system versioning for the sales person’s historical sales regions in to SalesPersonHistory table. Now we are going to learn how we can migrate this existing solution to system versioning or Temporal Table.

How to Migrate Existing Solution to use System Versioning?

Step1:

The first change we need to do is that in our dbo.SalesPerson table maximum date value for RowEndDate is 2079-12-31 but System versioning required it to be the 9999-12-31 otherwise it will throw below error. So let’s update the RowEndDate to as 9999-12-31.

Error: ADD PERIOD FOR SYSTEM_TIME failed because table ” contains records where end of period is not equal to MAX datetime.

| USE SQL2016Demo; GO UPDATE dbo.SalesPerson SET RowEndDate = CONVERT (DATETIME2, ‘9999-12-31 23:59:59.9999999’) GO |

Step2: Follow below T-SQL code to migrate existing solution to use System Versioning.

| USE SQL2016Demo; GO — Drop existing Stored Procedure, we also need to remove this stored procedure from the calling application. DROP PROCEDURE usp_UpdateSalesPersonRegion; — Adjust the schema for current and history table –Drop the Default Constraint from column RowStartDate and RowEndDate On table dbo.SalesPerson; ALTER TABLE dbo.SalesPerson DROP CONSTRAINT DF_SalesPerson_RowStartDate;ALTER TABLE dbo.SalesPerson DROP CONSTRAINT DF_SalesPerson_RowEndDate;— Change data types for existing period columns ALTER TABLE dbo.SalesPerson ALTER COLUMN [RowStartDate] datetime2 NOT NULL;ALTER TABLE dbo.SalesPerson ALTER COLUMN [RowEndDate] datetime2 NOT NULL; ALTER TABLE dbo.SalesPersonHistory — Add SYSTEM_TIME period and set system versioning with linking two existing tables — (a certain set of data checks happen in the background) ALTER TABLE dbo.SalesPerson ALTER TABLE dbo.SalesPerson |

Related Post

Temporal Table in SQL SERVER 2016

How to Implement Temporal Table on a New Table?